Quality Control and Data Assimilation

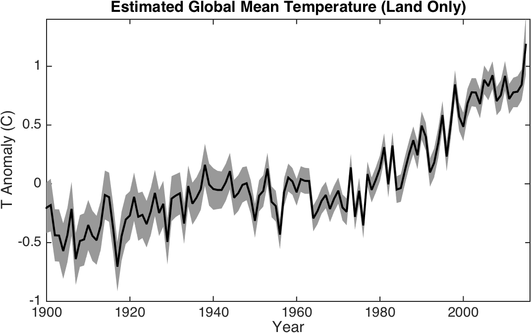

Much of what we know about climate change comes from studying the past. Historical temperature observations began in the late 1700s and have only been widespread since the late 1800s. To understand how much the climate will change in the future, it is important to know how much temperature has already changed in response to greenhouse gas emissions.

Annual estimates of global mean land temperature change since 1900, based on the NASA GISS dataset. Grey bands show an estimate of the 95% confidence interval.

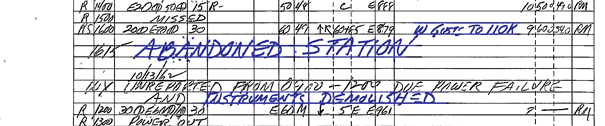

A major problem with historical observations is that they have been recorded using many different conventions, units, and types of equipment. Moreover, changes in these practices often went unreported. In some cases the metadata has simply been lost over time, while in others it was not seen as important, perhaps because most historical observations were used purely for day-to-day weather reports rather than long-term climate monitoring. Identifying and controlling for such changes is a task of first-order importance for assessing past climate change.

Excerpt from a Weather Bureau Army Navy report from a station in Corvallis, Oregon on October 10th, 1962. The storm caused significant damage and disrupted an otherwise continuous climate record.

As luck would have it, differences in recording conventions related to units (Celsius vs. Fahrenheit) and precision (e.g., 1 °C vs. 1.0 °C) leave subtle clues in the distribution of the data. We are able to recover the original conventions from these clues using an algorithm that we've termed precision-decoding. Much like accounting methods used for fraud detection, the algorithm determines which rounding and unit conversion steps have most likely been applied to the archived observations. The algorithm, described in a paper now under review at the Quarterly Journal of the Royal Meteorological Society, finds numerous observing system changes that will help us to better reconstruct historical temperatures.
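To give a feel for how rounding and unit conversion leave a fingerprint, here is a minimal Python sketch. It is not the precision-decoding algorithm from the paper, whose details are not given here, but a simplified round-trip consistency check. It assumes the archive stores temperatures in °C to the nearest 0.1, and it considers only four hypothetical recording conventions. The function names, the candidate list, and the `min_fraction` threshold are all illustrative assumptions.

```python
# Candidate original conventions (unit, precision), ordered from coarsest
# to finest in Celsius terms. This list is an assumption for illustration.
CANDIDATES = [("C", 1.0), ("F", 1.0), ("C", 0.1), ("F", 0.1)]


def round_trip(value_c, unit, precision):
    """Re-apply a hypothesised recording convention to an archived value.

    The archived value (deg C, 0.1 precision) is converted to the candidate
    unit, snapped to the candidate precision, then converted back to deg C
    and rounded to 0.1 as the archive would have done.
    """
    in_unit = value_c * 9 / 5 + 32 if unit == "F" else value_c
    snapped = round(in_unit / precision) * precision
    back_c = (snapped - 32) * 5 / 9 if unit == "F" else snapped
    return round(back_c, 1)


def consistent_fraction(values_c, unit, precision):
    """Fraction of archived values that the candidate convention reproduces."""
    hits = sum(
        abs(round_trip(v, unit, precision) - v) < 1e-9 for v in values_c
    )
    return hits / len(values_c)


def guess_recording_convention(values_c, min_fraction=1.0):
    """Return the coarsest candidate that explains enough of the data.

    Returns None if the input is empty or no candidate reaches min_fraction.
    """
    if not values_c:
        return None
    for unit, precision in CANDIDATES:
        if consistent_fraction(values_c, unit, precision) >= min_fraction:
            return (unit, precision)
    return None


# Synthetic archive: whole-degree Fahrenheit readings stored as 0.1 deg C.
archive = [round((f - 32) * 5 / 9, 1) for f in range(30, 81)]
print(guess_recording_convention(archive))
```

The sketch prefers the coarsest convention that reproduces the data, because a finer convention can always explain values that a coarser one produced. Real station records are noisy and can change convention partway through, so a practical method would need to work statistically and over time windows rather than demand an exact match.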